Meta’s highest executives neglected to help prevent sexual harassment of children on its platforms, says former engineer(35)

2025.11.11 15:42 Mariko Tsuji

Despite study results showing that minors were frequently sexually harassed, Meta executives did not act on the findings of the company’s own team.

Riley Basford, a resident of New York, USA, took his own life at age 15 after being blackmailed for money based on sexual images of himself. A criminal gang based in Nigeria had approached him on Facebook via a fake account.

His mother, Mary Rodee, has been calling for legislation to regulate online platforms in order to prevent more children from losing their lives. She and other bereaved parents have protested in front of Meta’s headquarters and have been lobbying lawmakers.

However, Mary has never received a personal apology from Meta regarding her son’s death.

Does Meta really intend to prevent the sexual exploitation of children and the related crime occurring on its platforms?

“By their actions, you know that they’re not prioritizing [it],” Arturo Bejar, a former Meta engineer, told Tansa.

Arturo Bejar being interviewed. Photo taken on April 2, 2024, by Mariko Tsuji.

Helping minors report harassment to Meta

Bejar worked for Meta for six years, from 2009 to 2015. He oversaw the department in charge of user protection and mainly worked to ensure the platform didn’t facilitate bullying and suicide among children. In particular, he emphasized building tools to make it easier for users to communicate problems and harm to Meta.

For example, if a user was hurt or disturbed by an interaction on the platform, they had the option to report “I was bullied or harassed” when describing the situation to Meta. However, teenagers did not submit this kind of report very often.

Therefore, Bejar and his team decided to use language closer to what children actually experience.

“When we asked teenagers what they were experiencing, they were like, ‘Oh, someone is making fun of me. Somebody is spreading rumors.’ And we found that if you use their language, then teenagers would select the [corresponding] option” to report such behavior, Bejar explained.

“There’s not an option where a young person can say, ‘Oh, this is making me uncomfortable. This is inappropriate. This is sexually suggestive. This is asking me to do these things.’ And without that option, then how do you even begin to make it safe for kids?” he continued.

Sexual message sent to his daughter

Bejar left Meta in 2015. By that time, Meta had grown into a social media giant, operating platforms like Instagram and WhatsApp in addition to Facebook.

A few years later, his daughter, then 14, started using Instagram.

One day, she received a message from an account that appeared to belong to a man, asking her to perform a sexual act. In addition, when she posted a video of herself and a car (cars were a shared hobby for Bejar and his daughter), she received comments making fun of her body and sexist remarks such as “Women should stay in the kitchen.”

Bejar told his daughter to report such comments to Meta. Although she did so using Instagram’s reporting function, the situation didn’t change.

“She would either not get a response or, when she did get a response, every single time it was, ‘Yeah, we can’t help you with that. Sorry. [The act you reported] doesn’t break our community guidelines,’” Bejar said.

Eventually, his daughter gave up reporting unwelcome comments. Many of her friends had similar experiences.

Bejar decided to return to Meta as a consultant, partially because of his daughter’s online experiences, which he had seen up close. However, there was another reason for his feeling that things had to change.

“I had been talking to people [who worked for] Instagram and found out that a lot of the work that had been done earlier, like three or four years earlier, had been removed, and people didn’t even remember that it had been done,” Bejar explained.

In 2019, he joined Instagram’s Wellbeing Team, which works on user protection.

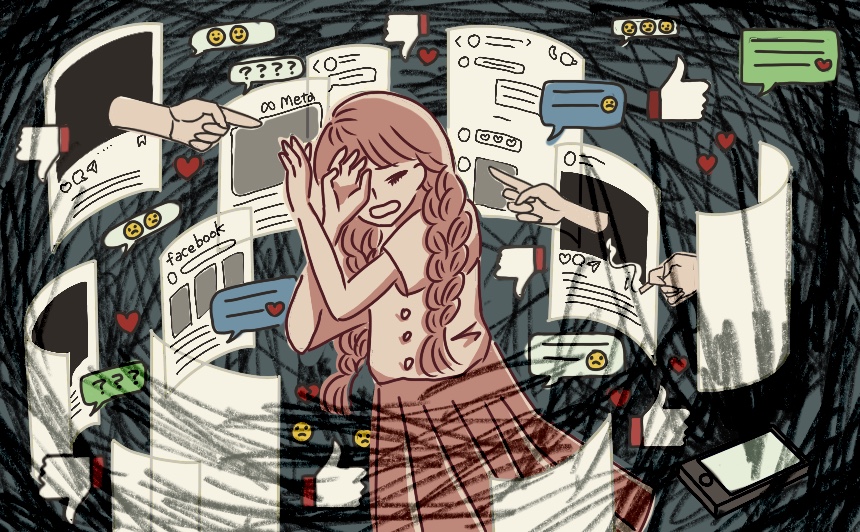

(Illustration by qnel)

One in eight children 13–15 experience unwanted sexual advances

Shortly after returning to the company, Bejar began to feel that management wasn’t prioritizing user protection.

“At first, the idea was ‘Why don’t you set a goal based on the harm that people are experiencing?’ And you try to put that in your monthly goals or your quarterly goals, and it wouldn’t happen. And when it went to review by senior executives, those goals would disappear,” he recalled.

Bejar felt he needed enough data to convince management of the seriousness of the situation. “Meta is a company that’s managed by data. Every decision is made by data,” he explained.

He therefore decided to conduct a study.

“I realized that what was needed was a study that captured the harm that people were experiencing on the platform, that was done in a very deliberate, careful, and vetted way, such that it would be an accurate representation of everybody who uses Instagram,” Bejar said.

“The BEEF survey is called that because it’s the Bad Experiences and Encounters Framework. The idea was to be able to capture the different kinds of bad experiences that everybody worldwide had on Instagram, and to get information not only about the experience that they had, but how old they were, and what they thought to do when they had those experiences.”

The survey — compiling 270,000 responses — found that minors frequently had uncomfortable experiences using Instagram.

For example, among respondents, 13% of children 13 to 15 years of age had received an unwanted sexual advance within the past week.

“It’s tragic,” Bejar said. “How come we live in a world where one in eight kids gets sexually harassed in the last seven days? It’s the largest-scale sexual harassment in the world of minors.”

Similarly, 26% of children 13 to 15 years of age said they had seen discrimination on the basis of gender, religion, or race on Instagram in the past seven days. Eleven percent said they had been bullied, ostracized, or gossiped about.

“It’s eminently preventable. It’s something that’s very easy for them to address … You can never eradicate it, but it should be a fraction of a percent,” Bejar said.

“And it should be as close to zero as you can make anything that involves humans and people, and to create an environment where respect is the norm, right?”

Study results not even allowed to be shared internally

In fall 2021, Bejar shared the results of his investigation with Meta’s management team by email. The email was addressed to the highest executives, known internally as the “M-Team.”

The M-Team included Meta CEO Mark Zuckerberg; Sheryl Sandberg, Facebook’s first female executive and the COO; Adam Mosseri, head of Instagram; and Chris Cox, Meta’s CPO, who oversees all products. In addition to sharing the results of his study, Bejar also explained the sexual harassment his own daughter had experienced.

“Sheryl Sandberg wrote back, ‘I’m so sorry that your daughter was experiencing these things. I know what it’s like to be a woman that gets told to get back to the kitchen, and it’s absolutely awful,’ and then [she] did not follow up,” Bejar said.

“And then Mark didn’t even reply to the email,” he added.

Bejar spoke directly with Mosseri and Cox. They said they were well aware of how many children had already been affected. But they never took concrete action.

“They don’t care about the harm that teenagers are experiencing on their platform,” Bejar said.

“You saw it at the hearing. He [Mark Zuckerberg] turned around and apologized,” he continued, suggesting that Zuckerberg should have instead said, “‘I want each of you parents to come into my office. I will spend time sitting down with you. I will ask my people to understand the harm that your child experienced, and I’m going to make changes to my product to prevent what can be prevented.’ It’s very simple, right? You don’t just turn around and say, ‘You should have never experienced this.’”

“By their actions, you know that they’re not prioritizing [protecting children] because all they needed to do when they came out [at the hearing] is to say ‘We are committed to reducing unwanted advances on kids,’” Bejar finished.

The M-Team did not even allow Bejar’s findings to be released internally. After discussions with Meta’s public relations and legal departments, he was asked to dilute the content and frame it as a mere hypothesis.

A copy of the email that Bejar sent to Meta’s M-Team, including Mark Zuckerberg. Image courtesy of Arturo Bejar.

“There’s nobody wanting to make kids safe”

In fall 2023, Bejar spoke to the Wall Street Journal about his experiences at Meta. At the same time, he joined the congressional hearings on online platforms as a witness.

Meta is “standing right there, and they could help. And that’s why I loved the work” to improve user safety, Bejar explained.

However, he said he feels that “there’s nobody watching out for your kids right now. There’s nobody wanting to make them safe. There’s nobody trying to prevent these things from happening.”

“When I blew the whistle, I chose that I would never work on this industry again,” he said.

Currently, Bejar is calling for strengthened government regulation, including requiring greater transparency about the harm occurring on online platforms.

“If you think about any place where you entrust your kids — think of an arcade, think of a movie theater, think of all those businesses where you let your kids go play — if you knew that one in eight kids got sexually harassed in one of those places in the last seven days, who would you hold to account? Whose responsibility is it? It’s the leadership’s responsibility. It’s the CEO’s responsibility. And when the CEO will not step up to address it, it is government’s responsibility to protect our children,” he emphasized.

(Originally published on August 29, 2024.)

Uploaded and Re-Uploaded: All articles
