30% of Requests for Deletion of Sexually Explicit Images Ignored (12)

2023.06.19 13:13 Mariko Tsuji

Under the current system, the onus is on victims to track down and report images they want deleted. In one instance, a victim paid over 2 million yen for a deletion service.

(Illustration by qnel)

“Video Share” and “Album Collection” are apps on which sexually explicit images are traded. Both have been listed on Google’s and Apple’s app stores and have been downloaded at least 100,000 times. The damage continues to grow.

Some victims, trying to stop the spread of their images, ask the perpetrator who posted them or the site operator to remove them.

However, the current legal system does not require perpetrators or businesses to remove such images. According to a nonprofit’s records, about 30% of removal requests are not granted.

Victims must try to rectify the situation on their own and do not know if they will succeed. Legal gaps are putting victims in difficult situations.

“I didn’t know what to do”

Recently, another woman sent me a message asking for advice.

“I hesitated for days, but I didn’t know what to do, so I decided to contact you,” her message began.

The woman, whom I’ll call C, said she had learned a few days earlier that her photos and videos were being traded on Album Collection. She purchased a folder containing her images to check the contents and found approximately 90 photos and a number of videos.

About two years ago, C started doing sex work that involved making video calls and chatting online with male customers. She also had a day job, but she took on the sex work to help cover living expenses: Her husband had become ill and was unable to work, and the Covid pandemic made their financial situation even more difficult.

The images of C posted to Album Collection had originally been published as part of her online sex work. Some of them showed her face, and some showed her in her underwear.

Photos and videos she had made for a certain tier of paying members were also included. The site where her images were originally posted was behind a paywall and was supposed to have a system that prevented customers from taking screenshots or otherwise saving the images.

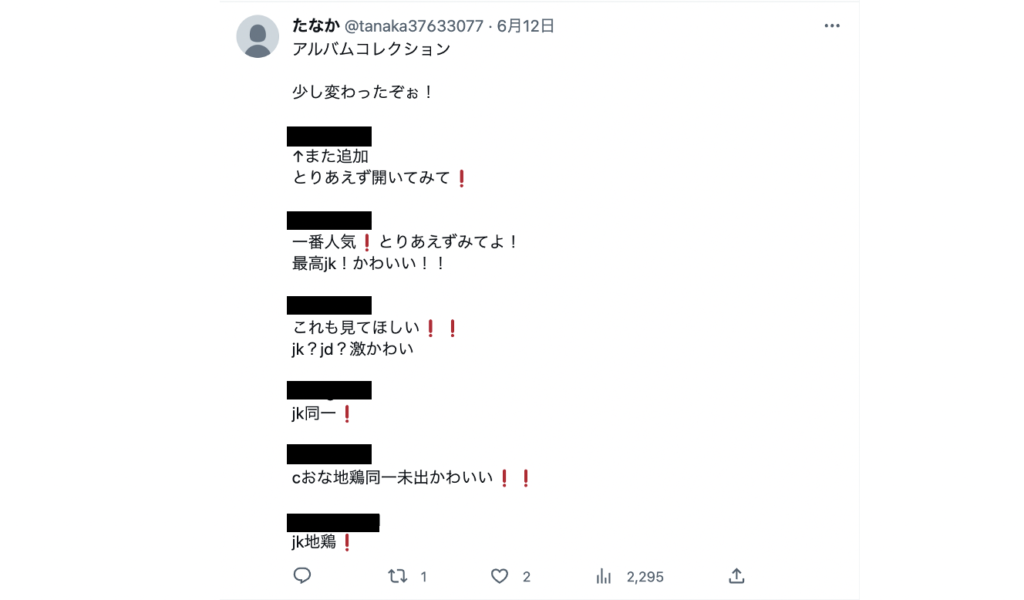

The photos also spread on Twitter. Several accounts that appeared to be Album Collection users tweeted pictures of C’s face and the password for the relevant folder. They advertised that the images were available for purchase on Album Collection.

C began searching online every day to see whether her images were being posted. She also directly contacted the Twitter users who had posted her picture. Some deleted the posts at her request, but others ignored her messages and continued to post them.

She emailed a removal request to the operator address listed on Album Collection’s website. However, even after the images were removed, the same images were soon reposted. There was no end to it. C told me she was afraid her relatives would find out and that she felt she couldn’t ask anyone for help.

“The images I requested were removed, but it’s still like playing whack-a-mole. I don’t know why that site hasn’t been taken down,” C said. “I feel so afraid when I think that the leaked images will be out there forever.”

Tweets promoting sexually explicit images are still being posted on Twitter. The “c” stands for junior high school student (chugakusei in Japanese), and “jk” stands for female high school student (joshi-kokosei in Japanese). The password that can be used to view the images in the app has been blacked out.

Even police say the only thing victims can do is repeatedly request that images be deleted

As I wrote in the first article of this series, my acquaintance A went to the police after her images were disseminated online. But the male police officer refused to help her.

“Speaking frankly, if it’s being spread like that online, it cannot be completely eliminated,” the officer told her, according to A. “There’s nothing for it but to request the photos and videos be deleted when you find them.”

However, once sexually explicit images are posted, they become a “commodity” and spread quickly because the posters want to make money off them. A’s and B’s images were saved by hundreds to thousands of people after a single posting. Those who saved them spread the images again to other places. It’s impossible for an individual to deal with something that has spread so far.

Ultimately, A also searched for her images and repeatedly requested that they be deleted. She found out about the leak last summer, and the images continue to spread to this day.

20,000 deletion requests in one year

PAPS is a nonprofit that provides support for victims of online sexual abuse. In addition to counseling victims, it makes image removal requests on their behalf. Both services are free of charge.

PAPS chief director Kazuna Kanajiri said that victims are being made responsible for the harm caused to them.

“Under the current system, the victims themselves have to find the images and request their removal,” she said. “In order to find their images, they must also view a large number of sexual images of others on adult websites and message boards. This risks causing secondary stress and trauma.”

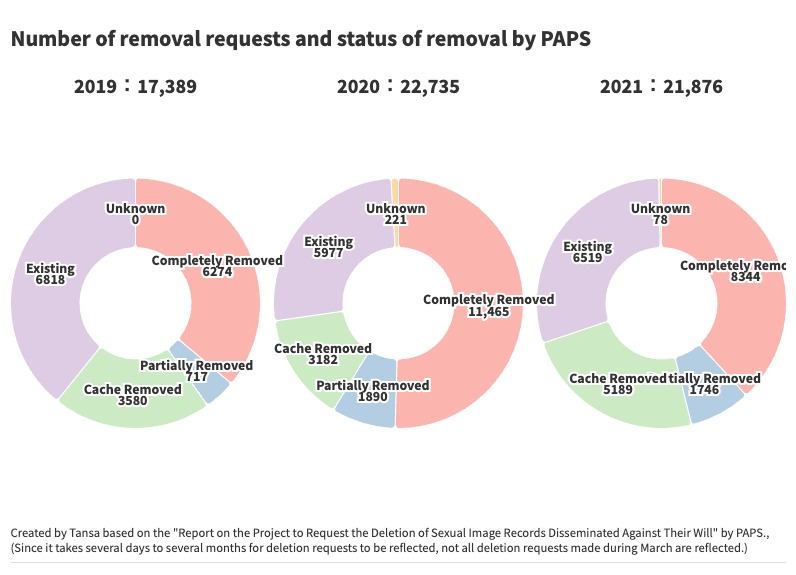

In fiscal year 2021, PAPS made approximately 20,000 image removal requests to website and social media platform operators, prompted by roughly 100 requests for help each month.

To find images for which to request removal, PAPS combines manual checks by its staff with a system that automatically detects images similar to those of the victim. The system also logs deletion requests and, every few days, checks again whether the requested images have been deleted and whether similar posts have appeared.

When PAPS handles the search, victims do not have to look for their images themselves. Victims can also hire a lawyer or a private company to handle removal requests, but because images keep getting reposted, this route becomes expensive. One victim paid a total of more than 2 million yen (about $14,300) to a company to have her images removed.

PAPS receives an increasing number of consultations every year, and the staff have their hands full. “Frankly, this is beyond the scope of what a single NPO can handle,” said Kanajiri.

The inefficacy of the “Provider Liability Limitation Act”

Individual victims are powerless to address the situation, and support organizations are stretched thin. But victims face an even harsher situation.

Even after a removal request is made, not all images get deleted. According to PAPS’ records from FY2021, 29.8% of requests failed to result in the images being deleted.

Images were completely removed in 38.1% of cases, while in 8% they were partially removed. In 23.7% of cases, the cache remaining on the server was deleted.

There are two reasons why images aren’t deleted even after a request.

One is that online operators and other digital platforms have no legal obligation to respond to removal requests.

The “Provider Liability Limitation Act” regulates operators’ response to deletion requests. This law applies not only to providers but also to individuals who operate online message boards.

Under this law, when an operator removes a requested image that clearly violates the requester’s human rights, the person who posted the image cannot claim damages from the operator.

Ministry of Internal Affairs and Communications “aware there is strong public concern” but doesn’t take action

However, the law does not stipulate that operators must respond to removal requests. Failure to respond does not result in punishment or a request for further action.

“There is no legal basis” was the vague response I received from a member of the Ministry of Internal Affairs and Communications’ Consumer Affairs Unit 2, which deals with harmful and illegal information on the internet.

“We are aware there is strong public concern about such sites,” he said. “However, there is no legal basis for administrative guidance, and from the standpoint of freedom of expression, realistically it is difficult for the ministry to deeply intervene in individual sites and content.”

Over 40% of sites were U.S.-based

A second reason images don’t get removed is that many of the websites where they are posted are based outside Japan. These sites often do not respond to removal requests.

Of the adult websites PAPS contacted with removal requests from FY2019 through FY2021, sites based in the United States received the most requests, accounting for more than 40% of the total. That was two to four times the number of requests PAPS sent to sites based in Japan.

If a site’s server is located overseas, Japan’s domestic laws do not apply to it. In this case, even a police investigation would have difficulty confirming communication records and identifying the operator, explained Kou Shikata, a professor of law at Chuo University and a former National Police Agency bureaucrat, in this series’ seventh article.

In order to avoid investigation and liability, some companies rent servers in regions that have lax laws or are not signatories to relevant international treaties, a practice known as “offshore hosting.” These servers have become a hotbed of crime.

Even after sending deletion requests for three years

B and her relatives, who described the harm caused to B by digital sex crimes in the first article in this series, have been checking for new posts and requesting their removal for more than three years.

B’s photos and videos were initially spread on Twitter and then appeared on Video Share and Album Collection. B, like C, initially didn’t reach out for help, searching for the images and making deletion requests herself. However, feeling trapped and overwhelmed, B attempted suicide. Fortunately, she survived, but her offline life has also been affected: She received an un-postmarked letter at home informing her that the images had been leaked and was followed by someone on her way home.

The relatives and others whom B asked for help divide the work among themselves and continue to check whether her images have been posted. Their daily monitoring covers various websites and social media platforms.

They will never have peace of mind.

For victims of digital sex crimes:

There is a dedicated consultation service for individuals whose sexually explicit images have been posted online without their permission or who have received threats or are being coerced based on such images.

One-Stop Support Center for Victims of Sexual Crimes and Sexual Violence: A consultation service for victims of sexual crimes and sexual violence that works with relevant institutions such as obstetric and gynecological care providers, counseling services, and legal consultation services.

NPO PAPS: A consultation service for victims of online sexual violence such as revenge porn, hidden cameras, gravure and nude filming, and those involved in the adult video industry and sex industry.

Yorisoi Hotline: A hotline to consult by phone, chat message, or social media platforms with a professional counselor about sexual harm.

Internet Hotline Center: A place to report child sexual abuse images. The Center shares such information with the police and requests site administrators and others to take measures to prevent their transmission.

(Originally published in Japanese on June 13, 2023.)

Uploaded and Re-Uploaded: All articles
